Today, Machine Learning is about computers learning from data rather than following hand-written rules. Machine Learning is not new; it has been used for years in applications such as spam filters.

Machine Learning is the ability of computers to learn and improve based on input and feedback. Applications range from stock price updates to song recommendations.

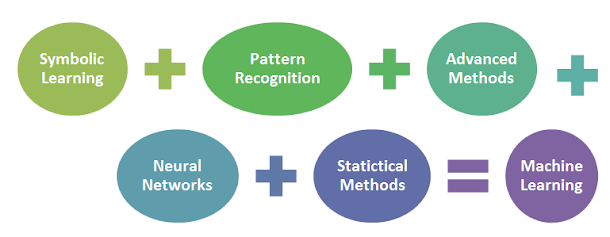

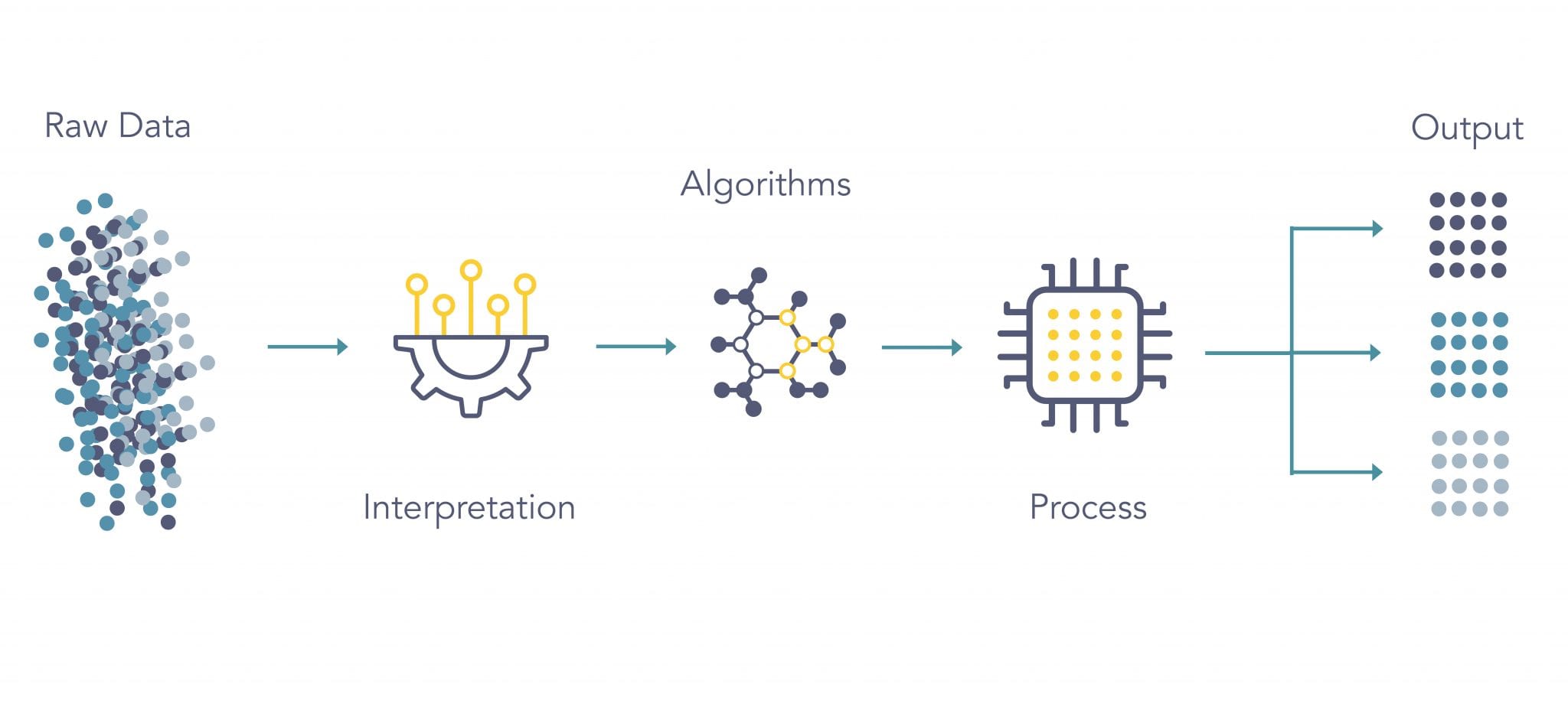

The basic structure of Machine Learning:

– Collect labeled training data

– Create a model using a Machine Learning algorithm (Training)

– Test the model with test data (Validation)

– Apply the predictive model to production systems such as trading or fraud detection
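The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production recipe: the data is synthetic, the "model" is a single threshold on a transaction amount, and every name here (`collect_labeled_data`, `train`, `predict`, `accuracy`) is hypothetical.

```python
import random


def collect_labeled_data(n=200, seed=0):
    """Step 1: build synthetic labeled data as (amount, label) pairs.

    Label 1 means "fraud", 0 means "normal". The assumption that
    fraudulent amounts tend to be larger is made up for this sketch.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        amount = rng.gauss(500.0, 50.0) if label else rng.gauss(100.0, 50.0)
        data.append((amount, label))
    return data


def train(training_data):
    """Step 2: 'train' by placing a threshold midway between class means."""
    fraud = [amount for amount, label in training_data if label == 1]
    normal = [amount for amount, label in training_data if label == 0]
    return (sum(fraud) / len(fraud) + sum(normal) / len(normal)) / 2


def predict(threshold, amount):
    """Step 4: flag an amount as fraud (1) if it exceeds the threshold."""
    return 1 if amount > threshold else 0


def accuracy(threshold, test_data):
    """Step 3: fraction of held-out examples the model labels correctly."""
    correct = sum(predict(threshold, amount) == label
                  for amount, label in test_data)
    return correct / len(test_data)


data = collect_labeled_data()
train_set, test_set = data[:150], data[150:]  # hold out 50 examples
model = train(train_set)
test_accuracy = accuracy(model, test_set)
print(f"threshold={model:.1f} accuracy={test_accuracy:.2f}")
print("new transaction flagged:", predict(model, 620.0))
```

Real systems use richer features and stronger algorithms, but the shape stays the same: the test data is kept apart from the training data so the accuracy figure reflects examples the model has not seen.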

So why Machine Learning? People who ask this question usually want to automate repetitive tasks, reduce human bias, or hand decisions to computers that can weigh more data than a person can. Machine Learning can learn from data and build predictive models with little human intervention.

Machine Learning can keep improving its results and accuracy because a model can be retrained as newly labeled data arrives, at a scale humans could not manage efficiently. The model is repeatedly re-evaluated against validation and test data, so we can continually improve it.

As the technology develops, Machine Learning will become more powerful, because computers are now faster than ever and can process terabytes or even petabytes of data in a practical amount of time.

It may not take long until Machine Learning replaces humans in certain tasks: voice assistants such as Siri and Alexa handling spoken requests, driverless cars replacing taxi drivers, and perhaps even some of the work done by lawyers!

Machine Learning has been used in many sectors that involve data analysis, including trading and financial markets, where Machine Learning algorithms identify patterns in historical trading data.

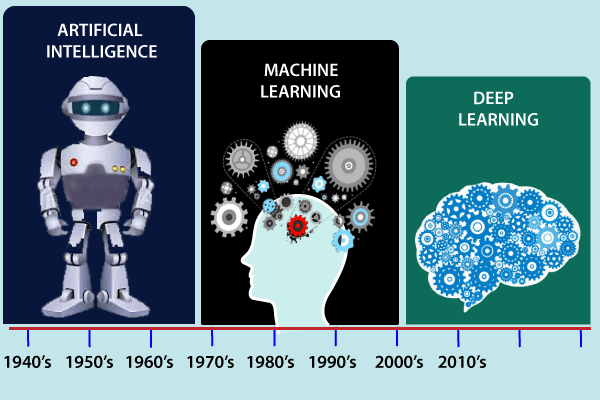

The History of Machine Learning

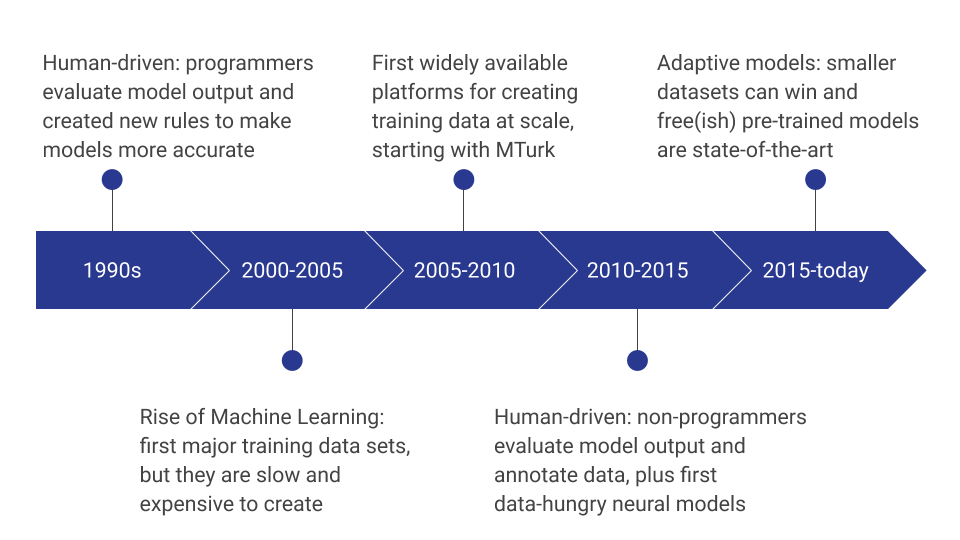

The term Machine Learning was coined in 1959 by Arthur Samuel, who used it to describe what he was doing at IBM. Some of its roots are often traced to work on anti-aircraft fire-control systems during World War II, which were designed to predict the paths of enemy planes.

These systems used observations of an aircraft's flight to predict its future position, applying statistics and probability theory to make decisions under uncertainty. This work is often described as an early precursor of Machine Learning technology.

Samuel had already demonstrated a checkers-playing program in 1956, an early milestone for Machine Learning research. Machine Learning remained largely an academic pursuit until 1980, when DARPA began focusing on it as part of its Artificial Intelligence program. Machine Learning was created with the goal of helping computers learn how to perform some tasks without human intervention, and it has come a long way since then.

The first decade of Machine Learning started with Alan Turing publishing his 1950 paper “Computing Machinery and Intelligence”, which proposed an experiment now known as the Turing test, in which a human judge questions a hidden respondent and tries to determine whether it is a human or a computer. It was an early framing of the question of machine intelligence.

One of the first general-purpose Machine Learning algorithms for binary classification, Rosenblatt's perceptron learning rule, also came out of this decade (1958).
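To make the perceptron rule concrete, here is a minimal sketch of the textbook form of the update: predict with a weighted sum and a step function, then nudge the weights by the prediction error. The function names, the learning rate, and the choice of the logical AND function as training data are assumptions made for illustration, not details of Rosenblatt's original work.

```python
def step(activation):
    """Threshold unit: fire (1) only if the weighted sum is positive."""
    return 1 if activation > 0 else 0


def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, passed through the step."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Apply the perceptron rule: w <- w + lr * (target - output) * x."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Logical AND is linearly separable, so the rule converges on it.
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
predictions = [predict(weights, bias, inputs) for inputs, _ in and_gate]
print(predictions)
```

The weights only change when the prediction is wrong, which is why training settles once every example is classified correctly.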

Machine Learning became popular in the 1960s, when early pattern-recognition systems were trained to recognize handwritten characters. (Geoffrey Hinton's influential work on neural networks came later, from the 1980s onward.) AI research grew more prominent in this period, including the work of Marvin Minsky and Seymour Papert at MIT. The Lisp machine, a computer architecture built to run Lisp programs efficiently, later emerged from the same MIT lab in the 1970s.

Early neural networks were applied to problems where knowledge is acquired over time through experience rather than being explicitly programmed.

One of the first programs capable of learning to play a board game was Arthur Samuel's checkers player, described in his 1959 paper. It searched ahead through possible moves and scored the resulting positions with an evaluation function whose weights were adjusted based on experience from previous games.

Over the following decade the program reached the level of a capable amateur player. More importantly, Machine Learning gave computers the ability to learn without explicit programming, which opened the way for it to become a research field of its own rather than just an offshoot of AI research.

During this same period, perceptrons, which were modeled after biological neurons and had been put forward in the late 1950s as a possible architecture for Machine Learning systems, were shown by Marvin Minsky and Seymour Papert (1969) to have fundamental limits: a single-layer perceptron cannot learn functions that are not linearly separable, such as XOR. This dampened the prospects of using neural networks for Machine Learning, at least through perceptrons.

The 1970s started with many researchers abandoning neural networks because of their shortcomings, such as the limits of single-layer models and the lack of a practical method for training multi-layer ones. Machine Learning researchers began focusing on symbolic and statistical methods instead.

Machine Learning grew more established in this decade, and its research began to form its own field within Computer Science rather than being overshadowed by AI research.

Expert systems also appeared in this period to help doctors make difficult decisions, such as whether to operate on a patient. These early systems mostly encoded rules supplied by human experts. Later systems that were trained on past results could recognize patterns or detect anomalies, making their recommendations more consistent than those based solely on individual experience.

Roger Schank developed conceptual dependency theory, a model for representing the meaning of natural-language sentences in terms of concepts and how they are related, which influenced later work on language-understanding programs. In later decades, Machine Learning algorithms were also used to detect credit card fraud, adjust insurance premiums, and even make predictions on baseball.

The Future of Machine Learning

Artificial Neural Networks are being actively researched for their promise in the future of Machine Learning.

Artificial Neural Networks use brain-inspired structures to learn, and on some tasks they produce results comparable to those of humans.

Systems built with Artificial Neural Networks have already taken part in many competitions and applications. These include systems that learn to navigate difficult terrain, classify galaxies based on their shape, and even play video games.

They have been heralded by some enthusiasts as a huge leap forward towards Artificial Intelligence. But what is really behind this trend? And what will drive its future development?

The future development of Machine Learning will be heavily influenced by two key factors: increasing computational power and changing adaptation methods.

Computational power will provide the necessary means of exploring Machine Learning systems that require large amounts of resources. With an ever-expanding demand for such systems, it is likely that these resources will be available in the future.

Adaptation methods refers to how these models learn. The likely direction is toward more complex models with nonlinear elements, which can capture highly nonlinear problems when paired with new data (much of which does not exist yet and needs to be gathered), but which require far more computational resources than traditional methods like Support Vector Machines.

These two key factors support each other: the increasing availability of computational resources allows research into new Machine Learning adaptation methods; this drives interest in gathering the datasets these new methods need, and so on.

Modern Machine Learning methods depend heavily on the availability of sufficient computational resources, as well as datasets.

An increase in these two factors will push forward research into new Machine Learning approaches, which could enable a wide variety of applications, such as real-time translation between languages.

This post was originally published on 30 November 2021 and is updated as new information becomes available.